Table of Contents

Introduction

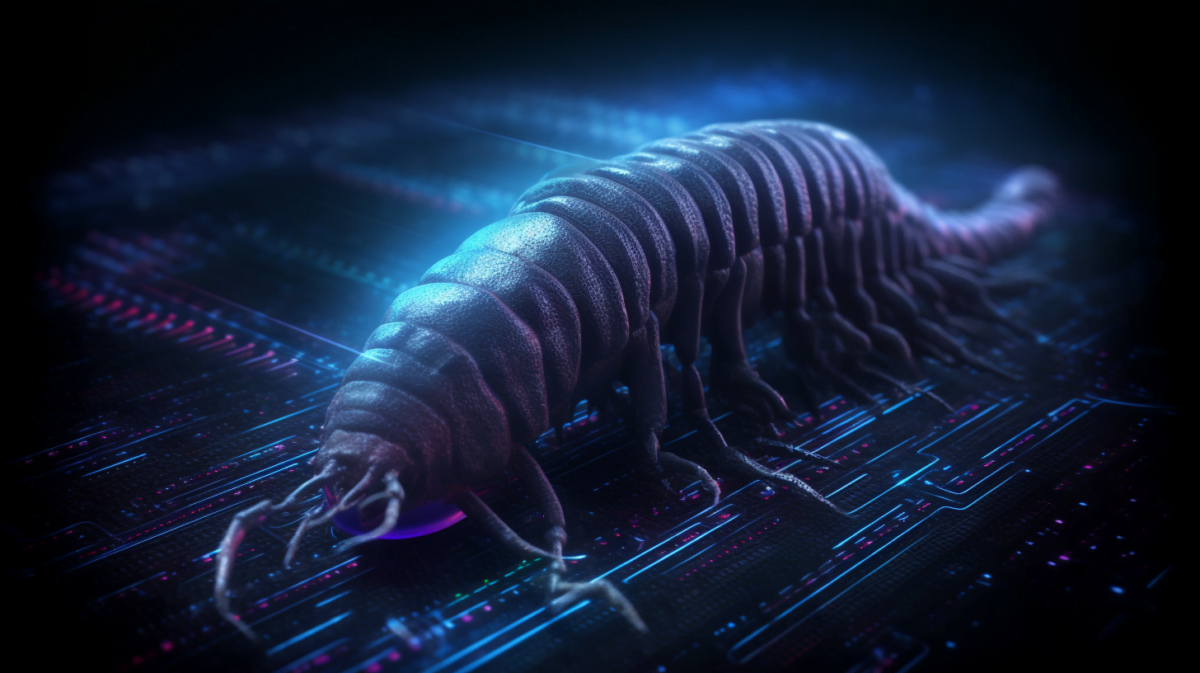

Artificial Intelligence (AI) has undeniably transformed various industries, but with progress comes new challenges. One such challenge is the emergence of AI worms, a cyber threat that exploits generative AI systems to autonomously spread between systems. In this article, we will delve into the world of AI worms, exploring their definition, unique characteristics, and the potential risks they pose to interconnected AI ecosystems.

Definition of AI Worms

AI worms are a novel form of cyber threat that capitalizes on generative AI capabilities. Unlike traditional computer worms, AI worms specifically target AI-powered systems and applications, employing adversarial self-replicating prompts to propagate through these systems.

How AI Worms Differ from Traditional Worms

While traditional computer worms self-replicate and spread to other computers, AI worms take it a step further by infiltrating AI systems. This key distinction makes them a formidable threat in the rapidly evolving landscape of cybersecurity.

The Rise of Morris II

Development and Purpose

Researchers from Cornell University, Technion-Israel Institute of Technology, and Intuit have recently unveiled Morris II, an AI worm designed to exploit generative AI email assistants. Named in homage to the disruptive Morris computer worm of 1988, Morris II showcases the potential risks and vulnerabilities in autonomous AI ecosystems.

Targeting Generative AI Email Assistants

Morris II’s primary target is generative AI email assistants, where it can steal data and disseminate spam. This poses a serious threat to prominent AI models like ChatGPT and Gemini, highlighting the need for robust security measures in the AI community.

Implications for AI-Powered Systems

Risks and Vulnerabilities

The research surrounding AI worms underscores the risks and vulnerabilities associated with interconnected and autonomous AI ecosystems. The potential for data theft and unauthorized actions demands immediate attention from the AI development community.

Breaching Security Measures

Morris II’s infiltration of generative AI email assistants demonstrates the capability of AI worms to breach security measures. Secure application design, human oversight, and proactive monitoring are essential to mitigate these risks effectively.

Morris II in Action

Data Theft

Morris II’s ability to infiltrate generative AI systems extends to the extraction of sensitive data. This raises concerns about privacy and the security of information handled by AI applications.

Spam Dissemination

Apart from data theft, Morris II is adept at disseminating spam through compromised AI systems. This not only impacts the performance of AI models but also poses reputational risks for organizations relying on these technologies.

Lessons from the Past: The Original Morris Worm

The nomenclature of Morris II pays homage to the original Morris worm, which wreaked havoc in 1988. Drawing lessons from the past, it becomes evident that history repeats itself, emphasizing the importance of staying vigilant against evolving cyber threats.

Mitigating Risks: A Call to Action

Importance of Secure Application Design

The development of AI worms reinforces the crucial role of secure application design. Implementing robust security protocols at the application level is imperative to prevent unauthorized access and mitigate potential threats.

Human Oversight in AI Operations

The research highlights the necessity of human oversight in AI operations. While AI systems are designed to operate autonomously, human intervention is essential to detect and respond to unusual patterns and potential security breaches.

Monitoring Unusual Patterns in AI Systems

Proactive monitoring is key to identifying and addressing unusual patterns within AI systems. This requires constant vigilance and the implementation of advanced monitoring tools to detect potential threats before they escalate.

Generative AI Worms in the Wild

As of now, generative AI worms have not been observed in the wild. However, experts and researchers emphasize the potential threat they pose, urging startups, developers, and tech companies to be proactive in addressing this emerging risk.

Recognizing the Threat

Recognizing the threat of AI worms is the first step towards developing effective countermeasures. By understanding the potential consequences and vulnerabilities, the AI community can work collaboratively to fortify defenses.

Traditional Security Measures

To defend against AI worms, traditional security measures such as firewalls, antivirus software, and secure network configurations remain crucial. These foundational defenses form the first line of protection against potential infiltrations.

Vigilant Application Design

Vigilant application design involves anticipating potential vulnerabilities and addressing them during the development phase. By prioritizing security features, developers can create robust applications that are less susceptible to AI worm attacks.

The Future of AI Security

Prioritizing Security in AI Development

The emergence of AI worms serves as a wake-up call for the AI development community to prioritize security. Incorporating security measures into the very fabric of AI systems is essential to safeguard against evolving cyber threats.

Addressing the Autonomous Spread of AI Worms

As AI-worms have the potential to spread autonomously between AI agents, addressing this unique vector for cyberattacks requires innovative solutions. Collaboration, research, and the implementation of advanced security protocols are paramount.

Conclusion

In conclusion, the advent of AI worms introduces a new dimension to cybersecurity. Morris II and its counterparts underscore the need for a proactive approach to security in AI development. By learning from historical incidents and embracing vigilant practices, the AI community can navigate the challenges posed by AI worms and fortify the future of AI.

FAQs

Are AI worms currently in the wild?

As of now, there have been no reported instances of AI worms in the wild. However, experts caution against complacency, emphasizing the need for proactive measures.

How can organizations defend against AI worms?

Defending against AI worms requires a multi-faceted approach, including traditional security measures, vigilant application design, and prioritizing security in AI development.

What is the significance of Morris II targeting generative AI email assistants?

Morris II’s focus on generative AI email assistants highlights the vulnerability of widely used AI models, necessitating heightened security measures in this domain.

Why is human oversight crucial in AI operations?

Human oversight is essential to detect and respond to unusual patterns in AI systems, providing an extra layer of defense against potential security breaches.

How can the AI community address the autonomous spread of AI worms?

Addressing the autonomous spread of AI worms requires collaborative efforts, research, and the implementation of innovative security protocols to stay ahead of evolving cyber threats.